From predictive maintenance to intelligent supply chain management, artificial intelligence (AI) algorithms analyze vast datasets in real time, enabling manufacturers to optimize energy usage and conserve valuable resources. Yet even as AI grows more advanced, machine learning processes still require human understanding and oversight to ensure that the results of AI models are accurate. This is where it becomes crucial to distinguish conventional AI from explainable artificial intelligence (XAI).

In the manufacturing context, explainable AI enables stakeholders to understand how AI algorithms arrive at specific recommendations or actions, fostering trust and facilitating collaboration between humans and machines. By demystifying the inner workings of AI models, manufacturers can identify potential biases, mitigate risks, and fine-tune processes for optimal performance.

This is the fourth article in our series “Closer look on…”, in which we highlight how AI is transforming energy consumption and resource conservation. Here we also explore explainable AI, how it can unlock additional cost savings, and how it is implemented within the MARS project.

Advantages of AI in manufacturing

Machine learning (ML) models can identify patterns and structures in data to make predictions without being explicitly programmed to do so. These models can be a useful tool for forecasting the final quality of a workpiece at the end of a manufacturing process, helping to improve both the efficiency and the reliability of that process.

How can explainable AI save further costs?

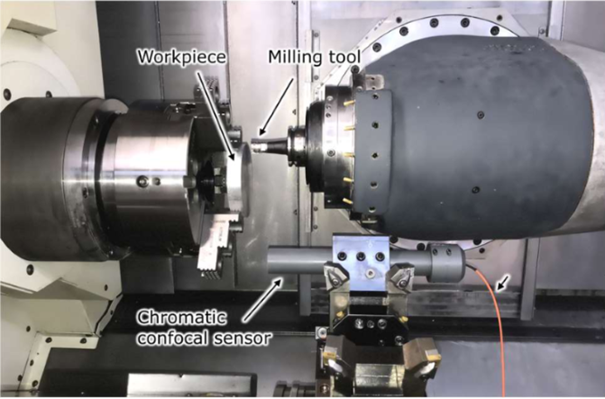

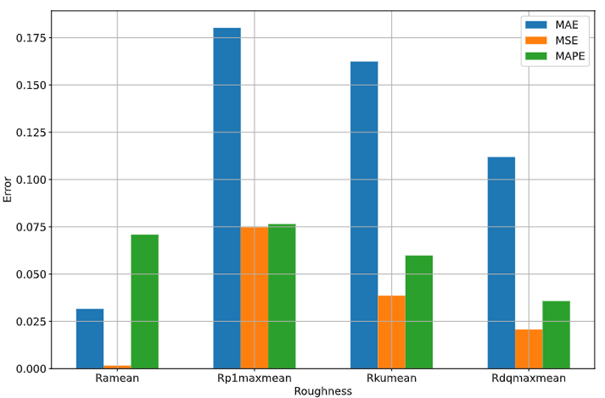

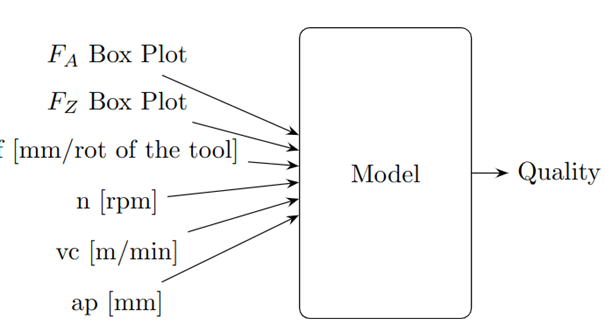

The case study produced by our coordinator ENSAM and our consortium partner Simula Research Laboratory demonstrates the advantages of using explainable ML techniques in surface roughness prediction models for the manufacturing sector. Surface roughness typically depends on the process inputs in a highly non-linear way, and such models generalize poorly to other types of processes, machines, and tool geometries. However, by using explainability scores derived from feature importance analyses, we can interpret the model predictions more effectively. This approach makes it possible to identify and assess how much each individual feature contributes to the overall predictive accuracy of the model. For economic reasons, this allows us to remove sensors from the manufacturing machines while maintaining, and for certain surface roughness values even improving, predictive accuracy. Additionally, using these ML models as digital twins of the corresponding physical machines opens new avenues for applying parameter optimization methods.

This study applies explainable machine learning to predict milling surface roughness, utilizing random forest regression and feature permutation importance. Key achievements include the creation of an accurate predictive model and the identification of non-essential sensors. Eliminating these redundant sensors, especially those measuring normal cutting force, enhances the model’s cost-effectiveness without sacrificing accuracy. This work exemplifies how explainable ML can optimize manufacturing processes, combining improved performance with economic efficiency.
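The core loop of permutation feature importance can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the case study's code: the data are synthetic, the feature names (`feed_rate`, `depth_of_cut`, `normal_force`) are hypothetical, and a least-squares linear model stands in for the random forest. The swap is legitimate because permutation importance only needs a fitted model's predictions. Each column of the held-out set is shuffled in turn, the resulting rise in mean squared error (MSE) is taken as that feature's importance, and the lowest-ranked channel is then dropped and the model refitted to check that accuracy holds.

```python
import random

# Hypothetical input channels; the case study's real feature set may differ.
FEATURES = ["feed_rate", "depth_of_cut", "normal_force"]

def make_data(n, rng):
    """Synthetic stand-in for milling records (assumption): roughness depends on
    feed rate and depth of cut; the simulated normal-force channel adds nothing."""
    X, y = [], []
    for _ in range(n):
        f, d, fn = rng.random(), rng.random(), rng.random()
        X.append([f, d, fn])
        y.append(2.0 * f + 0.5 * d + rng.gauss(0.0, 0.1))
    return X, y

def fit_linear(X, y):
    """Least-squares fit with intercept, solving the normal equations by Gauss-Jordan."""
    A = [[1.0] + row for row in X]  # prepend intercept column
    k = len(A[0])
    # Augmented matrix [A^T A | A^T y]
    M = [[sum(a[i] * a[j] for a in A) for j in range(k)]
         + [sum(a[i] * t for a, t in zip(A, y))] for i in range(k)]
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(M[r][c]))  # partial pivoting
        M[c], M[p] = M[p], M[c]
        pivot = M[c][c]
        M[c] = [v / pivot for v in M[c]]
        for r in range(k):
            if r != c:
                factor = M[r][c]
                M[r] = [a - factor * b for a, b in zip(M[r], M[c])]
    return [row[k] for row in M]  # [intercept, w_1, ..., w_m]

def mse(w, X, y):
    preds = (w[0] + sum(wi * xi for wi, xi in zip(w[1:], row)) for row in X)
    return sum((p - t) ** 2 for p, t in zip(preds, y)) / len(y)

def permutation_importance(w, X, y, n_repeats, rng):
    """Mean rise in held-out MSE when a single feature column is shuffled."""
    base = mse(w, X, y)
    scores = []
    for j in range(len(X[0])):
        rise = 0.0
        for _ in range(n_repeats):
            col = [row[j] for row in X]
            rng.shuffle(col)
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, col)]
            rise += mse(w, shuffled, y) - base
        scores.append(rise / n_repeats)
    return scores

def select(X, cols):
    return [[row[j] for j in cols] for row in X]

rng = random.Random(0)
X_train, y_train = make_data(200, rng)
X_test, y_test = make_data(100, rng)

w_full = fit_linear(X_train, y_train)
importance = dict(zip(FEATURES, permutation_importance(w_full, X_test, y_test, 10, rng)))

# "Remove the sensor": drop the least important channel and refit without it.
drop = min(importance, key=importance.get)
keep = [j for j, name in enumerate(FEATURES) if name != drop]
w_reduced = fit_linear(select(X_train, keep), y_train)

mse_full = mse(w_full, X_test, y_test)
mse_reduced = mse(w_reduced, select(X_test, keep), y_test)
print({name: round(s, 4) for name, s in importance.items()})
print(f"dropped {drop}: test MSE {mse_full:.4f} -> {mse_reduced:.4f}")
```

In practice, scikit-learn's `RandomForestRegressor` together with `sklearn.inspection.permutation_importance` would replace the hand-written model and loop. Either way, the decision to remove a sensor should rest on importance scores computed on held-out data, as here, rather than on the training set.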

Explainable AI within the MARS project

In the MARS project, multiple AI models will be developed for different purposes. These include, for instance, models that suggest optimal cutting conditions, a model that predicts the quality of the manufactured part and supports process self-correction, a model that monitors overall manufacturing process health, and cause-of-failure models with an emphasis on explainable AI. By incorporating methods such as root-cause analysis and model feature importance, the project will enhance the transparency and understandability of the decision-making process throughout the development of all its AI models.

The project also focuses on developing neuro-symbolic constraint models for automatic process planning, which are essential to the proposed DFMS for distributing manufacturing tasks across a geographically distributed network of manufacturing platforms. By combining logic-based, symbolic constraint models with data-driven machine learning, the methodology aims to capture both the hard constraints and the parameter uncertainties inherent in manufacturing processes. Integrating logical rules with insights drawn from historical data enhances interpretability and explainability. Through collaboration between SRL and AIN, constraint-based models for process planning will be explored and combined with AI techniques, with a focus on supervising and adjusting AI models so that they remain aligned with manufacturing requirements.
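To illustrate how symbolic constraints and a learned model can share the work in process planning, the sketch below encodes hard constraints as named, human-readable rules and uses a data-driven score to rank only the plans that satisfy them. Everything here is hypothetical: the machines, limits, candidate plans, and the `predicted_roughness_um` function (a stand-in for a model trained on historical data) are invented for illustration and do not describe the MARS models. Because each rejected plan records the rules it violates, the planner's choices can be explained directly.

```python
from dataclasses import dataclass
from itertools import product

@dataclass(frozen=True)
class Plan:
    machine: str
    tool_diameter_mm: float
    feed_mm_per_tooth: float

# Hypothetical shop-floor data, for illustration only.
MACHINES = {"mill_A": {"max_feed": 0.15}, "mill_B": {"max_feed": 0.25}}
SLOT_WIDTH_MM = 10.0
MIN_FEED = 0.1  # hypothetical throughput requirement

# Symbolic layer: hard constraints as named, readable rules.
RULES = {
    "tool fits slot": lambda p: p.tool_diameter_mm <= SLOT_WIDTH_MM,
    "feed within machine limit": lambda p: p.feed_mm_per_tooth <= MACHINES[p.machine]["max_feed"],
    "meets throughput": lambda p: p.feed_mm_per_tooth >= MIN_FEED,
}

def predicted_roughness_um(p: Plan) -> float:
    """Data-driven layer: hypothetical stand-in for a model trained on historical runs."""
    machine_offset = {"mill_A": 0.1, "mill_B": 0.3}[p.machine]
    return 0.2 + machine_offset + 20.0 * p.feed_mm_per_tooth ** 2 + 0.02 * p.tool_diameter_mm

def choose_plan(candidates):
    """Keep plans that satisfy every rule, pick the one with the lowest predicted
    roughness, and record which rules rejected the others (the explanation)."""
    feasible, rejected = [], {}
    for p in candidates:
        violated = [name for name, rule in RULES.items() if not rule(p)]
        if violated:
            rejected[p] = violated
        else:
            feasible.append(p)
    best = min(feasible, key=predicted_roughness_um)
    return best, rejected

candidates = [Plan(m, d, f) for m, d, f in product(MACHINES, [8.0, 10.0, 12.0], [0.05, 0.1, 0.2])]
best, rejected = choose_plan(candidates)
print(best, round(predicted_roughness_um(best), 2))
# Plan(machine='mill_A', tool_diameter_mm=8.0, feed_mm_per_tooth=0.1) 0.66
print(rejected[Plan("mill_A", 12.0, 0.2)])
# ['tool fits slot', 'feed within machine limit']
```

Keeping the rules separate from the learned score means an engineer can audit or tighten a constraint without retraining the model, while the model can be updated with new data without touching the rules.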

Conclusion

Overall, the MARS project represents a pioneering effort to use AI to improve the efficiency of manufacturing processes through a zero-defect concept, while ensuring transparency and interpretability through explainable AI methodologies.

The integration of explainable AI represents a paradigm shift: unlike conventional AI models that merely support decision-making, XAI can show stakeholders such as manufacturers, practitioners, and managers why the model reaches a particular decision. XAI can also reveal when a variable is unnecessary as a model input, which may mean that external (and potentially expensive) sensors need not be installed on the manufacturing platform. The MARS project aims to embrace these transformative technologies so that European manufacturers can not only thrive in an increasingly competitive market but also contribute to a more sustainable and resilient European manufacturing industry for generations to come.